Last time, we talked about the problem of new data arriving continuously, which forces us to re-train our model. This time, we will look at how studies try to find a solution by reducing the dimension (X in the last example) of the datasets.

Feature selection for classification can be seen as a combinatorial optimization problem: the objective is to select a subset of n attributes from an initial set of N attributes, such that n < N, and the quality of a solution can be measured by the quality of the classification model built using this subset of attributes. The search space contains 2^N candidate subsets (each of the N attributes is either kept or dropped), and the problem is considered NP-hard, so approximate methods such as metaheuristics are often used to find a good-quality solution in a reasonable time.
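To get a feel for how quickly this search space grows, here is a small sketch that enumerates every subset of a tiny feature set and then prints the count for larger values of N. The helper name `all_subsets` is mine, purely for illustration.

```python
from itertools import combinations


def all_subsets(num_features):
    """Yield every subset of feature indices, from the empty set to the full set."""
    for size in range(num_features + 1):
        yield from combinations(range(num_features), size)


# With 4 features we can still list everything: 2^4 = 16 subsets.
print(len(list(all_subsets(4))))  # 16

# For larger N, exhaustive enumeration quickly becomes impractical.
for n in (10, 20, 30):
    print(n, 2 ** n)  # 1024, 1048576, 1073741824
```

Training one classifier per subset is already out of reach for a few dozen features, which is why approximate search is attractive here.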

In a previous work, a metaheuristic based on local search, called Bee Swarm Optimization (BSO), was used to solve the feature selection (FS) problem. And to “take you back to when it all first started”:

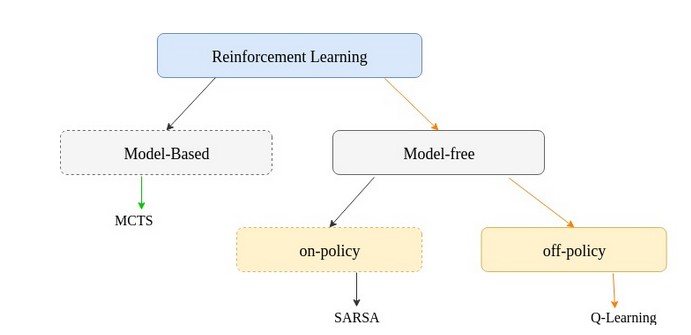

Our project consists of introducing reinforcement learning into this existing method, so first I’m going to briefly present the algorithm and what could be improved in the local search.

The Bee Swarm Optimization (BSO) metaheuristic is inspired by the foraging behavior of real bees: a bee starts by leaving the hive to find a flower and gather nectar. Then it returns to the hive and unloads the nectar. If the food source is rich, the bee communicates its direction and distance to its mates via a dance. The other bees naturally follow the one that indicates the best food source.

First, an initial solution is generated randomly or via a heuristic. This solution becomes the reference solution, refSol, from which a search region (a set of candidate solutions) is determined. Each candidate solution is then assigned to a bee as the starting point of a local search. At the end of the search, each bee communicates the best solution it found to the others through a table called dance.
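The steps above can be sketched roughly as follows. This is a minimal illustration, not the implementation from the previous work: the way the search region is built (the `flip` parameter), the rule that the best entry of the dance table becomes the next refSol, the simple bit-flip local search, and the `toy_fitness` function standing in for classification accuracy are all assumptions of mine.

```python
import random


def toy_fitness(solution):
    # Hypothetical stand-in for classification accuracy:
    # features 0, 2 and 5 are "relevant", and every selected feature has a small cost.
    relevant = {0, 2, 5}
    hits = sum(1 for i in relevant if solution[i])
    return hits - 0.1 * sum(solution)


def search_region(ref_sol, n_bees, flip):
    # Assumption: bee k starts from refSol with bits k, k+flip, k+2*flip, ... inverted.
    n = len(ref_sol)
    region = []
    for k in range(n_bees):
        candidate = list(ref_sol)
        for i in range(k % n, n, flip):
            candidate[i] = 1 - candidate[i]
        region.append(candidate)
    return region


def local_search(start, fitness, max_steps):
    # Simple hill climbing: accept any single-bit flip that improves the score.
    best, best_score = list(start), fitness(start)
    for _ in range(max_steps):
        improved = False
        for i in range(len(best)):
            neighbor = list(best)
            neighbor[i] = 1 - neighbor[i]
            score = fitness(neighbor)
            if score > best_score:
                best, best_score, improved = neighbor, score, True
        if not improved:
            break
    return best, best_score


def bso(fitness, n_features, n_bees=4, flip=3, max_iter=10, local_steps=5, seed=0):
    rng = random.Random(seed)
    ref_sol = [rng.randint(0, 1) for _ in range(n_features)]  # random initial solution
    best, best_score = list(ref_sol), fitness(ref_sol)
    for _ in range(max_iter):
        # Each bee runs a local search and writes its result into the dance table.
        dance = [local_search(s, fitness, local_steps)
                 for s in search_region(ref_sol, n_bees, flip)]
        # Assumption: the best entry of the dance table becomes the next refSol.
        ref_sol, ref_score = max(dance, key=lambda entry: entry[1])
        if ref_score > best_score:
            best, best_score = list(ref_sol), ref_score
    return best, best_score


best, score = bso(toy_fitness, n_features=8)
print(best, score)
```

On this toy problem every bee finds the same subset (features 0, 2 and 5) almost immediately, because each bit can be improved independently. With a real classifier as the fitness function, features interact and the search is much harder.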

The problem with the current definition of the best solution found is this: we consider a solution as a vector of bits mapped to the set of features (1 means the feature is used, 0 means it is not), and the quality of a solution is the classification accuracy obtained with the selected subset. This does not account for the effect or contribution of an individual added or removed feature. We think this is a great opportunity to use reinforcement learning methods to try to find a good policy for keeping the most relevant features, based on the effect of a subset of features.
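To make the encoding concrete, here is a small sketch of a bit-vector solution and of one possible way to measure the contribution of a single feature: the change in score when its bit is flipped. The feature names, the `evaluate` function (a toy stand-in for classification accuracy) and the idea of using this difference as a reward signal are assumptions for illustration only, not a settled design.

```python
def selected_features(solution, feature_names):
    """Map a bit vector back to the names of the features it keeps."""
    return [name for bit, name in zip(solution, feature_names) if bit == 1]


def flip_reward(solution, index, evaluate):
    """Score change caused by adding or removing the feature at `index`."""
    flipped = list(solution)
    flipped[index] = 1 - flipped[index]
    return evaluate(flipped) - evaluate(solution)


def evaluate(solution):
    # Hypothetical accuracy: "age" helps a lot, "weight" helps a bit, "height" hurts.
    return 0.5 + 0.2 * solution[0] - 0.05 * solution[1] + 0.1 * solution[2]


feature_names = ["age", "height", "weight"]  # made-up names
solution = [1, 0, 1]

print(selected_features(solution, feature_names))  # ['age', 'weight']
print(flip_reward(solution, 1, evaluate))  # negative: adding "height" lowers the score
print(flip_reward(solution, 0, evaluate))  # negative: removing "age" lowers the score
```

A plain accuracy score only says how good the whole subset is, while a per-flip difference like this says something about each individual feature, which is the kind of signal a learned policy could exploit.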

If you have any comments or suggestions, let me know in the comments. I could really use some help (in fact, I switched to Medium on a friend’s suggestion; for me it’s all the same).